Fixion

Virtualisation system for my home

Summary

I work a lot with Linux systems administration and I love self-hosting. I often need to create virtual machines to quickly try something potentially destructive, to run tests over a few hours or days, or to host services permanently for myself or others.

I don’t like to create VMs on my laptop because it uses too much CPU and memory, and I often want to keep the VMs running while my laptop is in sleep mode.

That’s why I invested in a small dedicated virtualisation server for my home. In this post, I describe all the technical details of setting it up and running it. Here is my simple virtualisation system based on Ubuntu, libvirt, libguestfs and shell scripts.

Use-cases

- Testing things on a fresh and ephemeral OS.

- Self-hosting of persistent services.

- Testing my DHCP and DNS infrastructure by instantiating a new system on the network.

- Developing and testing Ansible playbooks.

Main features and components

- For comfort and simplicity, I use Ubuntu on my workstation, hypervisor and guests. Ubuntu feels cozy to me. It’s easy to get things done with it, and there’s lots of help and resources on the web.

- Minimal provisioning is done using simple shell scripts calling virt-clone to create qcow2 images from a template, and virt-install to interact with libvirt.

- Ansible playbooks set up observability on VMs automatically, so I can immediately get monitoring and start watching them in Grafana.

Architecture

In addition to the virtualisation server, there are three other main components:

- My laptop, where I run my infrastructure automation scripts and playbooks.

- Watchtower, my observability platform, a VM running Prometheus, Loki, Alertmanager, Grafana and others.

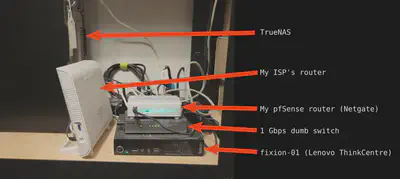

- TrueNAS, my storage and backups server. There is another TrueNAS server at my brother’s house and we have cross-site replication tasks for backups.

Scalability

This is really just a single-node virtualisation platform. If at some point I buy one or two more servers for virtualisation, I won’t have an orchestrator to schedule VMs on nodes automatically: libvirt manages a single node and doesn’t form a cluster. Automatic scheduling would require something like OpenStack or oVirt.

If I had multiple virtualisation hosts, I would probably just manually and statically assign VMs to hosts. If I needed high availability across a node failure, I would configure redundancy at the application level and put a load balancer in front.

Hardware

My virtualisation server runs on a relatively inexpensive and tiny form-factor Lenovo ThinkCentre.

I paid 329.78$ (CAD) on eBay for the computer: i5-4570T processor with 16 GB of memory and a 240 GB SSD.

Five months later, the SSD died. I upgraded to a 1 TB SSD I bought on Newegg for 149.46$ (CAD).

Software

My setup is built on Ubuntu, libvirt, virt-manager and libguestfs.

- Ubuntu Server Guide.

- libvirt, “the virtualisation API.”

- Virtual Machine Manager, “desktop user interface for managing virtual machines through libvirt.”

- virt-clone, “command line tool for cloning existing inactive guests. It copies the disk images, and defines a config with new name, UUID and MAC address pointing to the copied disks.”

- libguestfs, “tools for accessing and modifying virtual machine disk images.”

- virt-sysprep, “reset or unconfigure a virtual machine so that clones can be made from it.”

- TrueNAS Core, “Open NAS Operating System.”

- Automated replication and off-site backups of data volumes formatted with ZFS.

Origin of the name “fixion”

For over a decade, my host naming scheme in my home network has been subatomic particles.

My laptop is higgs, but I also have electron, proton, positron, etc.

“Fixion” sounds like a subatomic particle (two syllables, ends in -on), but it’s a fake name, and it’s a homonym of fiction, because VMs are virtual 😆

So my hypervisor’s hostname is fixion-01.

My code repository with scripts and Ansible playbooks is called fixion.

This whole virtualisation solution is called “Fixion”.

Why not Docker Swarm or Kubernetes?

- Docker containers are too minimal and restricted for many of my use-cases.

- Docker Swarm and Kubernetes add layers of abstraction (and complexity) that I really don’t need.

- Same goes for terraform, cloud-init, and other infrastructure provisioning frameworks. They add abstraction and complexity that would not benefit me at the small scale I operate.

- I want a full OS with enough systems administration utilities, systemd, and an IP address on my network.

Why not my TrueNAS server?

I do have a TrueNAS server with sufficient memory and CPU capacity, and it can manage and run virtual machines, but I would prefer that the memory be dedicated to ZFS and storage related services.

The only VM that runs on the TrueNAS box is Nextcloud. That’s because it’s mostly a storage service and it’s easier and more reliable to run it on the NAS box where the large storage volume can be directly attached to the VM.

Installation of the hypervisor system

Setting up the hypervisor host:

- Download the Ubuntu Server installer.

- Copy the installer to a USB flash drive.

- Do a basic install of Ubuntu Server.

- Enable SSH.

- Create my user.

- Enable password-less sudo.

- https://askubuntu.com/questions/192050/how-to-run-sudo-command-with-no-password

- Run sudo visudo.

- Change the %sudo line like this: %sudo ALL=(ALL:ALL) NOPASSWD:ALL

Run an Ansible playbook to configure the hypervisor:

- Install these packages:

- cpu-checker

- qemu-kvm

- libvirt-daemon-system

- bridge-utils

- libguestfs-tools

- virtinst

- Add my user to the libvirt and kvm groups.

- Remove the netplan configs created by the OS installer:

- Delete /etc/netplan/00-installer-config.yaml.

- Delete /etc/netplan/00-installer-config-wifi.yaml.

- Create a new network config with bridge networking:

- Copy /etc/netplan/10-ethernet-bridge.yaml (file content below).

- Run netplan apply.

- Fix Ubuntu bug for libguestfs:

- https://bugs.launchpad.net/ubuntu/+source/linux/+bug/759725

- You have to make the Linux kernel image file readable by all, which is safe, but Ubuntu devs are paranoid about it.

- Create /etc/kernel/postinst.d/statoverride (file content below).

- Run dpkg-statoverride --update --add root root 0644 /boot/vmlinuz-$(uname -r) to apply the stat override immediately on the kernel image file.

Here is my hypervisor’s netplan config 10-ethernet-bridge.yaml:

network:
  version: 2
  ethernets:
    eno1:
      dhcp4: false
  bridges:
    br0:
      interfaces: [ eno1 ]
      dhcp4: true
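The network interface is not always called eno1. As a minimal sketch, the same bridge config can be generated for any NIC name with a small shell function. The name render_bridge_netplan and its arguments are hypothetical, for illustration only, and are not part of my playbook.

```shell
# render_bridge_netplan NIC [BRIDGE]
# Print a netplan config that enslaves NIC to a bridge (default: br0)
# and lets the bridge, not the NIC, get its address over DHCP.
render_bridge_netplan() {
    nic=$1
    bridge=${2:-br0}
    cat <<EOF
network:
  version: 2
  ethernets:
    ${nic}:
      dhcp4: false
  bridges:
    ${bridge}:
      interfaces: [ ${nic} ]
      dhcp4: true
EOF
}
```

For example, `render_bridge_netplan eno1 | sudo tee /etc/netplan/10-ethernet-bridge.yaml` writes the file shown above. When working over SSH, `sudo netplan try` may be a safer way to apply it than `netplan apply`, since it rolls back if the change is not confirmed.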

Here’s my statoverride file:

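#!/bin/sh
# Kernel post-install hook: keep each newly installed kernel image
# world-readable so that libguestfs can use it.
version="$1"
[ -z "${version}" ] && exit 0
dpkg-statoverride --update --add root root 0644 "/boot/vmlinuz-${version}"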
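To check whether the override took effect, one option is to look at the permission bits of the kernel images. This is a minimal sketch that assumes GNU stat, as shipped on Ubuntu. The helper name is_world_readable is hypothetical.

```shell
# is_world_readable FILE
# Succeed if FILE is readable by "other" users, fail otherwise.
is_world_readable() {
    # GNU stat: %a prints the octal permission bits, e.g. 644 or 600.
    mode=$(stat -c '%a' "$1") || return 2
    # The last octal digit holds the "other" permissions; bit 4 is read.
    other=${mode#"${mode%?}"}
    [ $((other & 4)) -ne 0 ]
}
```

Usage on the hypervisor could look like this: `for k in /boot/vmlinuz-*; do is_world_readable "$k" || echo "$k is not world-readable"; done`.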

Requirements on my laptop

Install libguestfs-tools, virt-manager, libvirt-clients.

Create a base image

What I tried before:

I wanted to use virt-builder and virt-install because I liked the idea of using a community-maintained base image. However, I found the images were not as well-maintained as I would have liked. They required too many customizations and workarounds, and the result still wasn’t really satisfying to use.

What I do now:

Building my own template image and using virt-clone from the virt-manager package has been a better experience. I feel that I know my base image better. I made it according to my preferences. Maintenance and upgrades don’t take much time because installing Ubuntu on a VM takes about an hour total.

- Installed drivers are optimized for my hardware and environment automatically.

- Console keyboard layout set to French Canadian.

- Storage layout is a single partition formatted in ext4.

- My user is created, SSH public key imported from GitHub.

- After the installation process is completed, I configure passwordless sudo.

The clone-vm script and Ansible playbooks handle all other preferences and customization.

Clone a VM

Here are the tasks that are automated in this clone-vm script:

- SSH into fixion.

- Run virt-clone to create a new qcow2 image based on my Ubuntu template system.

- Set the hostname.

- Insert a firstboot-command to generate new SSH host keys.

- Inject my SSH public key.

- Set the new VM to autostart.

- Boot the VM, and wait for it to be ready.

- Scan the host SSH keys.

- Add the host to the Prometheus targets.

- Add the host to the Ansible inventory.
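The “wait for it to be ready” step is essentially a polling loop. A plain loop waits forever if the VM never comes up, so here is a minimal sketch of a bounded variant. The helper retry_until is hypothetical and only illustrates the idea.

```shell
# retry_until MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
# Run COMMAND until it succeeds, at most MAX_TRIES times,
# sleeping DELAY_SECONDS between attempts.
retry_until() {
    max_tries=$1
    delay=$2
    shift 2
    try=1
    while ! "$@"; do
        if [ "$try" -ge "$max_tries" ]; then
            return 1   # give up: the command never succeeded
        fi
        try=$((try + 1))
        sleep "$delay"
    done
    return 0
}
```

For example, `retry_until 60 2 nc -zw 2 "$1" 22 || exit 1` would probe port 22 at most 60 times and then abort instead of hanging.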

The clone-vm script:

#!/bin/bash
# Clone the Ubuntu template into a new VM, boot it, and register it with
# SSH known_hosts, Prometheus and the Ansible inventory.

if [ -z "${1}" ]; then
    echo Please provide a hostname.
    exit 1
fi

IMAGES_DIR=/var/lib/libvirt/images

# The heredoc is unquoted on purpose: the variables and the public key are
# expanded on the laptop before the commands run on the hypervisor.
ssh fixion-01 bash <<EOF
virt-clone \
    --connect qemu:///system \
    --original ubuntu-template-01 \
    --name "${1}" \
    --file "$IMAGES_DIR/${1}.qcow2"
sudo virt-sysprep \
    --connect qemu:///system \
    --domain "${1}" \
    --hostname "${1}" \
    --firstboot-command "dpkg-reconfigure openssh-server" \
    --ssh-inject "alex:string:$(cat ~/.ssh/id_rsa.pub)"
virsh \
    --connect qemu:///system \
    autostart "${1}"
virsh \
    --connect qemu:///system \
    start "${1}"
EOF

echo Waiting until port 22 is available.
until nc -vzw 2 "${1}" 22; do sleep 2; done

echo Scanning host SSH keys.
ssh-keyscan -t ecdsa -H "$1" 2>/dev/null >>~/.ssh/known_hosts
ssh-keyscan -t ecdsa -H "$1.alexware.deverteuil.net" 2>/dev/null >>~/.ssh/known_hosts
ssh-keyscan -t ecdsa -H $(dig +short "$1.alexware.deverteuil.net") 2>/dev/null >>~/.ssh/known_hosts

echo Adding to Prometheus service discovery.
tmpfile="$(mktemp "prom.${1}.XXXX.yaml")"
cat > "$tmpfile" <<EOF
---
- targets:
    - ${1}.alexware.deverteuil.net
  labels:
    cluster: alexware.deverteuil.net
    node_type: libvirt-vm
    owner: alexandre
EOF
chmod +r "$tmpfile"
scp "$tmpfile" "watchtower-02:prometheus_file_sd_configs/${1}.yaml"
rm "$tmpfile"

echo Adding to Ansible inventory
cat > "inventory/vm-${1}" <<EOF
[guests]
${1}.alexware.deverteuil.net
EOF

echo "Don't forget to run the setup-guests.yml playbook."
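The hostname argument ends up in a remote shell command, in file names and in YAML, so it may be worth rejecting anything that is not a plain DNS label before doing any work. Here is a minimal sketch, assuming lowercase single-label names. The helper valid_hostname is hypothetical and not part of the script above.

```shell
# valid_hostname NAME
# Succeed if NAME is a single DNS label: 1 to 63 characters, lowercase
# letters, digits and hyphens only, with no leading or trailing hyphen.
valid_hostname() {
    name=$1
    [ -n "$name" ] && [ "${#name}" -le 63 ] || return 1
    case $name in
        -*|*-) return 1 ;;   # leading or trailing hyphen
        *[!abcdefghijklmnopqrstuvwxyz0123456789-]*) return 1 ;;   # any other character
    esac
    return 0
}
```

A guard such as `valid_hostname "$1" || { echo "Invalid hostname: $1"; exit 1; }` near the top of the script would then stop on names containing spaces, quotes or shell metacharacters.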

Base configuration with Ansible

Most of my VM customization and configuration is done with Ansible, not in the template image. This way, it’s easier to keep my customization synchronized and updated across all VMs.

Right after cloning a VM, I run an Ansible playbook that does the following tasks:

- Install some utilities: curl, vim, unzip.

- Install and configure prometheus-node-exporter and prometheus-process-exporter.

- Install and configure Promtail (client for aggregating logs with Loki).

- Install and configure unattended-upgrades. Documentation:

- https://help.ubuntu.com/community/AutomaticSecurityUpdates

- https://ubuntu.com/server/docs/package-management

- Comments in the /usr/lib/apt/apt.systemd.daily script.
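For illustration only, here is a rough shell sketch of what enabling unattended upgrades comes down to. It is not the playbook itself. It assumes the stock Ubuntu file /etc/apt/apt.conf.d/20auto-upgrades, and the function name render_auto_upgrades is hypothetical.

```shell
# render_auto_upgrades
# Print the two APT periodic settings that turn on daily package list
# updates and daily unattended upgrades.
render_auto_upgrades() {
    cat <<'EOF'
APT::Periodic::Update-Package-Lists "1";
APT::Periodic::Unattended-Upgrade "1";
EOF
}
```

On a guest, this could be applied with `sudo apt install unattended-upgrades` followed by `render_auto_upgrades | sudo tee /etc/apt/apt.conf.d/20auto-upgrades`.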

Persistent and replicated data storage for applications

Some VMs are persistent and run production services. Therefore, I need the ability to attach volumes for extra storage, and I need the safety of data replication and backups.

What I tried before:

I wrote an article on Using TrueNAS as an iSCSI storage backend for libvirt. I ran this for a couple of months, but I quickly encountered issues. The iSCSI connection would drop randomly, and the read/write speed wasn’t good. After some research, I gave up on using iSCSI because my network is just not fast and reliable enough. Now I use local storage for my VMs, and replicate the data to the TrueNAS box using rsync or ZFS replication tasks.

What I do now:

I keep the storage pool local to the hypervisor host. It has a 1 TB SSD, partitioned with LVM. I like that libvirt can use a Volume Group as a storage pool. See Logical volume pool in the libvirt documentation.

Guest root volumes are in qcow2 format, stored on the libvirt-base-images logical volume mounted on /var/lib/libvirt/images.

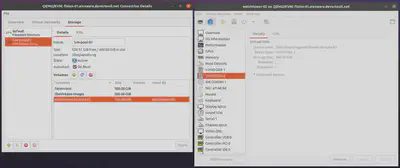

I rarely need to set up additional separate persistent storage for a VM. It’s easy enough to set up manually using Virtual Machine Manager, which is why I haven’t automated this part.

Pictured: the LVM Logical Volume attached to watchtower-02.

After creating and attaching a volume on a VM in the Virtual Machine Manager UI, I SSH into the guest and format the volume with ZFS.

Automatic ZFS snapshots are enabled by installing zfsutils-linux.

sudo apt install zfsutils-linux

Then I set up a ZFS dataset replication job on my TrueNAS box.

Destroy a VM

I like to run ephemeral VMs, so the teardown also needs to be automated.

Here’s what the delete-vm script does:

- Shut down the VM, and wait for it to be stopped.

- Undefine the VM in libvirt, also removing (deleting) all attached storage volumes.

- Remove the host keys from known_hosts.

- Remove the host from Prometheus targets.

- Remove the host from the Ansible inventory.

delete-vm script:

#!/bin/bash
# Shut down and delete a VM, then unregister it from SSH known_hosts,
# Prometheus and the Ansible inventory.

if [ -z "${1}" ]; then
    echo Please provide a hostname.
    exit 1
fi

read -r -p "Delete VM $1 and delete its storage? " confirm
if ! [[ "$confirm" =~ ^[yY] ]]; then
    echo Aborted.
    exit 1
fi

echo Removing host SSH key from known_hosts.
ssh-keygen -R "$1"
ssh-keygen -R "$1.alexware.deverteuil.net"
ssh-keygen -R $(dig +short "${1}.alexware.deverteuil.net")

echo "Shutting down $1."
virsh shutdown "$1"
# Match the whole line so that one VM name cannot match another that contains it.
until virsh list --state-shutoff --name | grep -qxF -- "$1"; do
    echo Waiting for shutdown...
    sleep 1
done

echo "Deleting $1."
virsh undefine "$1" --remove-all-storage

echo Removing host from Prometheus service discovery
ssh watchtower.alexware.deverteuil.net rm "prometheus_file_sd_configs/${1}.yaml"

echo Removing host from Ansible inventory
rm "inventory/vm-${1}"
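One detail worth care in the shutdown loop is how the VM name is matched against the output of `virsh list --name`. A substring match would let a VM named web match web-01, and a dot in the name would act as a regex wildcard. Here is a minimal sketch of a whole-line, fixed-string match. The helper name_in_list is hypothetical.

```shell
# name_in_list NAME
# Read one name per line on stdin; succeed only if NAME is exactly one of them.
name_in_list() {
    # -x: match the whole line; -F: treat NAME as a fixed string, not a regex.
    grep -qxF -- "$1"
}
```

It would be used as `until virsh list --state-shutoff --name | name_in_list "$1"; do sleep 1; done`.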

YouTube video

I have a recorded presentation from 2016 where I give an overview of libvirt and libguestfs and explain the tools they come with.

Conclusion

I’ve used this simple libvirt-based virtualisation environment in the past (see List of virtual machines, 2016, in French) and I liked it enough to go back to it with new hardware.

Right now, it hosts two permanent VMs:

- watchtower

- My observability platform based on Grafana, Prometheus and Loki.

- Runs Grafana, Prometheus, Loki, Alertmanager, PushGateway, blackbox_exporter, Promtail and graphite_exporter.

- I’ll probably write a blog post about that later.

- lgtm-lab

- A lab environment with the Grafana Enterprise Stack, for work-related training and testing.

I currently have three ongoing testing and development projects:

- Pi-hole. I’m trying it out as a DNS-level ad blocker.

- A LAMP stack, because I might want to migrate my web services from FreeBSD jails on TrueNAS to an Ubuntu VM.

- A host for testing Grafana Agent configs for a customer at Grafana Labs.